Last Updated on 14th April 2020

The demand for Safeguarding Information

Recent findings from Ofcom tell us that 83% of adults have concerns about harms to children on the internet (Ofcom, 2019). So, it is no surprise that there’s now unprecedented demand for safeguarding information, advice and guidance from parents, carers and safeguarding professionals.

This appetite for information about online risks and safety tips is reflected in an increase in traditional and non-traditional outlets sharing or re-sharing information. This has occasionally resulted in major press outlets and leading charities publishing inaccurate and outdated information. While everyone can make mistakes, it seems that too few take the time to properly understand, monitor and credibly report on apps.

Information sharing is central to safeguarding, but the credibility of what is being communicated is critical. It influences how safeguarding professionals and carers respond. If those responsible for safeguarding children don’t get the right information or, even worse, get the wrong information, the children and young people in their care are likely to be more vulnerable.

Incorrect advice and guidance

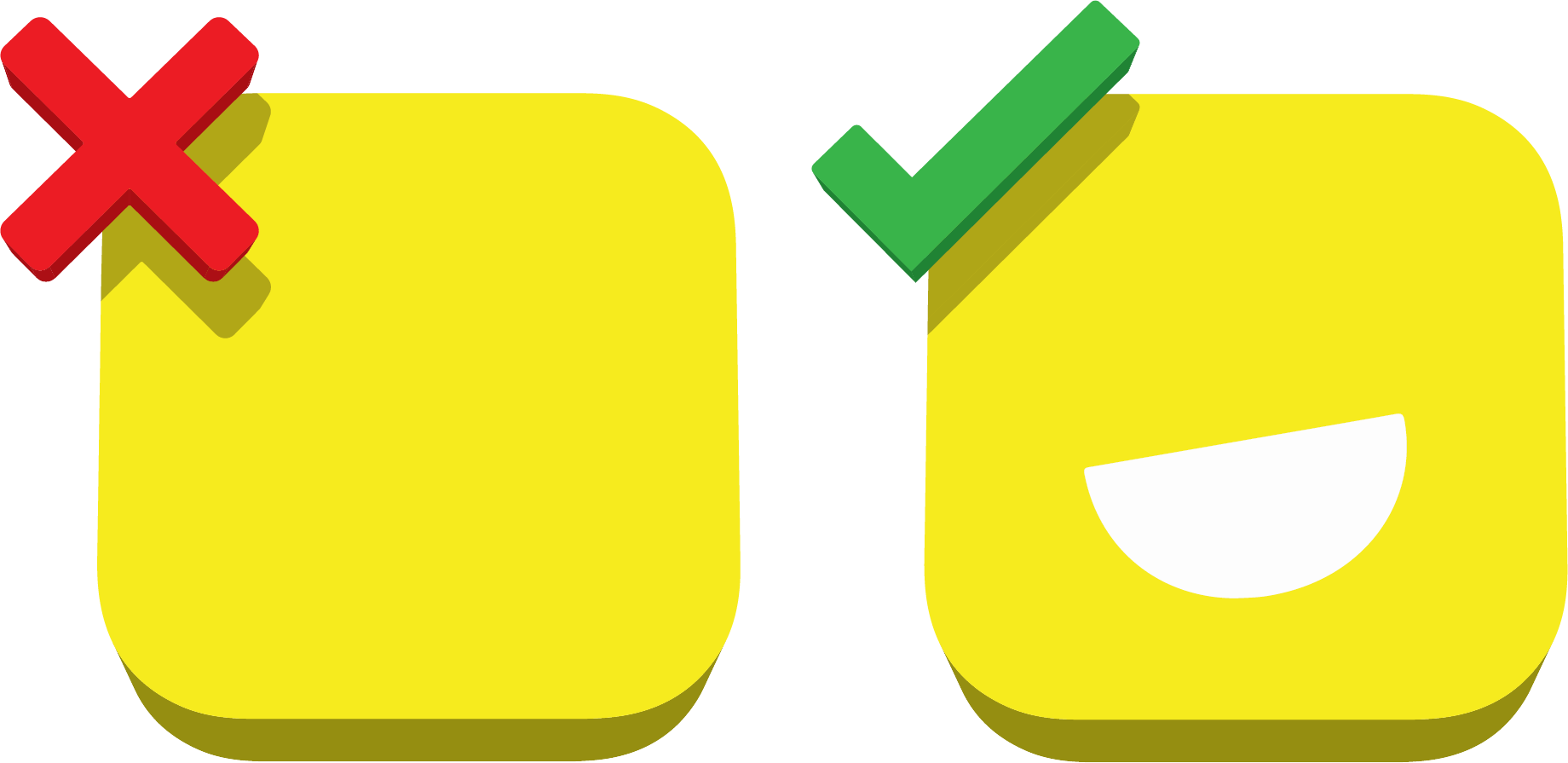

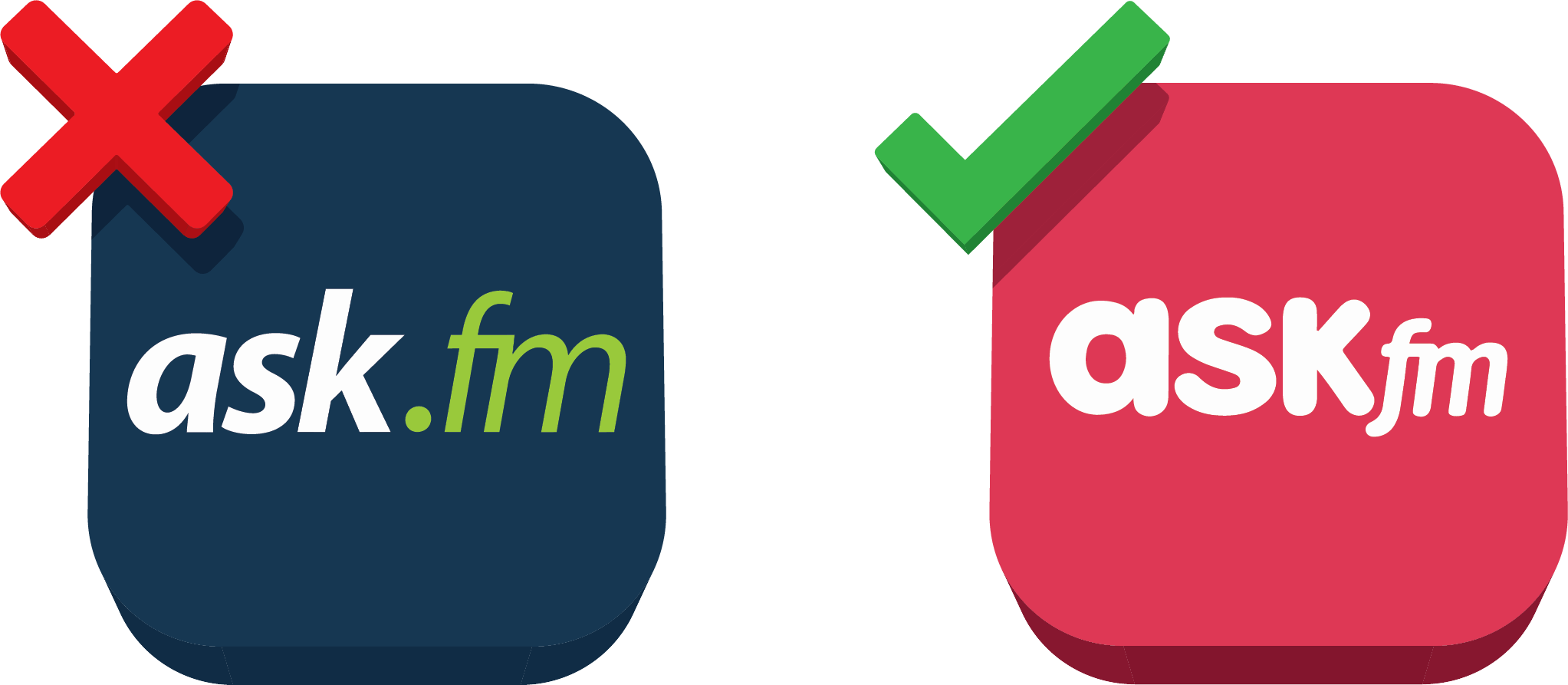

We frequently see out-of-date icons for high-profile/high-risk apps in articles published by otherwise credible outlets. In simple terms, this means that parents, carers and safeguarding professionals who rely on them are likely to damage their credibility or miss high-risk apps on a young person’s or vulnerable adult’s device.

Last week, the New York Times published an article that included a graphic from a New Jersey Police notice depicting ‘popular apps’ parents should monitor. Musical.ly and Live.ly were featured on this list, but both no longer exist, having been merged into TikTok in the summer of 2018. The New York Times is not alone, nor is inaccurate sharing limited to their side of the pond. There are good examples in the UK and Ireland of people (who should know better) sharing safety information well past its sell-by date.

That said, the problem of identifying credible ‘information feeds’ goes beyond charities and news outlets. We have also seen a rise in the number of ‘internet vigilantes’ who are involved in unregulated activity online. Some of them are raising awareness via sensationalist scare tactics, including shocking live streams and screenshots of abuse.

These practices aim to gain attention and purport to raise awareness in the name of safeguarding – with little understanding of its principles. The risk is that they operate in the absence of vetting, training and any real safeguarding experience.

Getting it right

There’s a clear need for those reporting on risks and platforms online to continue to learn, review and test the platforms before they publish information.

We know how difficult that can be, even with dedicated teams fact-checking and verifying information. A good working relationship with key social media and online industry outlets is of paramount importance. Here at Ineqe Safeguarding Group, we have two active teams committed to monitoring, testing and reporting on new and existing apps that young people use. Our Safety and Content team spend every day keeping up to date with new apps, existing and emerging trends and threats, as well as new safety features and reporting mechanisms. They are complemented by the Learning, Research and Development Team, who carry out behaviour-based research and monitor Serious Case and Practice learning reviews, policy papers, reports and academic studies. Both teams work closely with external partners to make sure we are exposed to challenge and, most importantly, that our finger remains firmly ‘on the pulse’.

Their work informs our weekly Safeguarding Hub newsletter and Digital Safeguarding in 60 seconds videos. It also supports the work of our regional safeguarding editorial panels across the UK, which shape the content in our Safer Schools partnership App. The App provides up-to-date safeguarding information, advice and guidance to schools across the country.

My message to parents, carers and safeguarding professionals is this: information sharing is at the heart of how we make our children safer. So, make sure that you check both the credibility of the sources you use and the accuracy of the information they supply. And to be clear, don’t just rely on a search engine or algorithm. Google doesn’t do context and, when it comes to making our children safer, the buck stops with you.

Jim Gamble QPM