Last Updated on 26th September 2023

As we get further into 2022, many of today’s top social media apps and platforms have started rolling out new features with the intention of protecting their users. Instagram’s new features mainly focus on screen time limits, account security, and content control – areas that its parent company Meta was accused of neglecting last year.

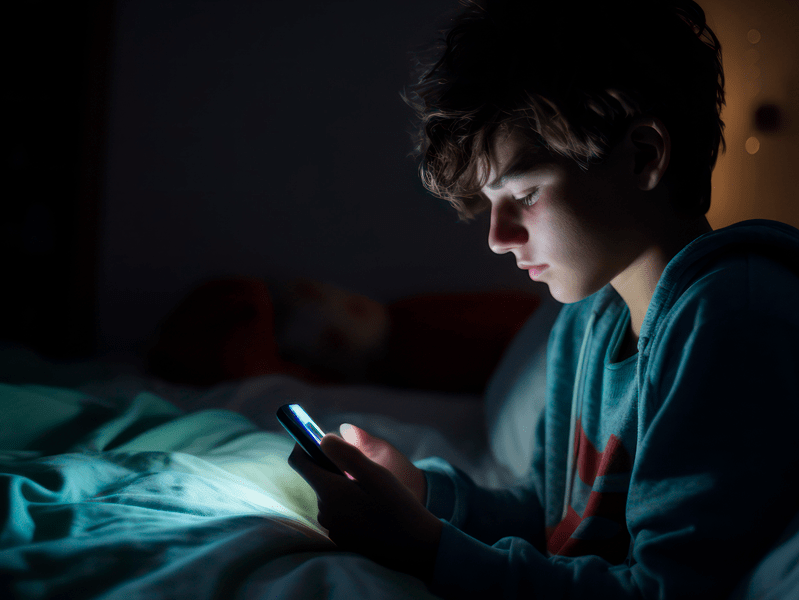

Instagram is growing in popularity with children and young people. This has led to a rise in children under 13 lying about their age to create a profile. Our online safety experts have looked at all of Instagram’s new features to help parents, carers, school staff, and safeguarding professionals make young people safer on the platform.

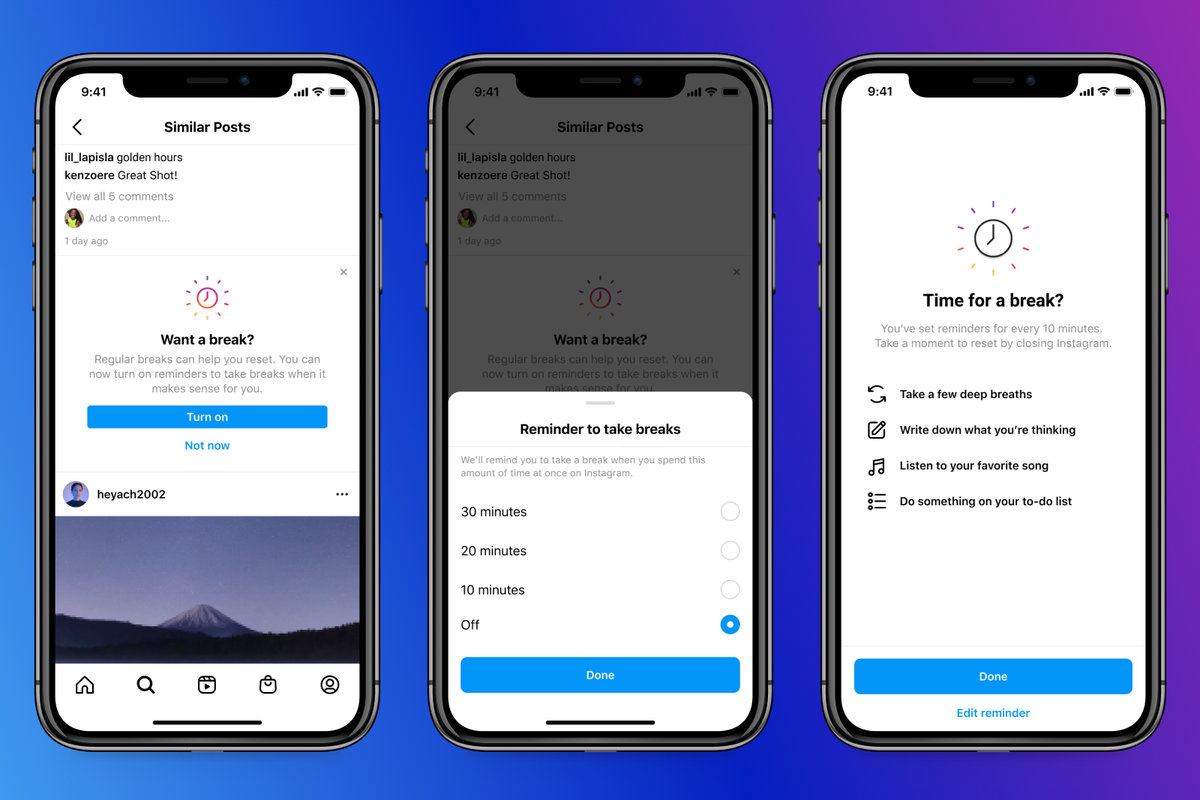

1. Take a Break feature

What is it?

This feature was launched in December 2021 after months of testing. Users can choose to enable a notification system that interrupts their in-app activity with a ‘Take a Break’ message after a selected period: 10, 20, or 30 minutes. The message includes a list of possible alternative activities, such as ‘Go for a walk’, ‘Listen to some music’, or ‘Write down what you’re thinking’. Testing showed that 90% of users kept this feature enabled.

Areas of Concern

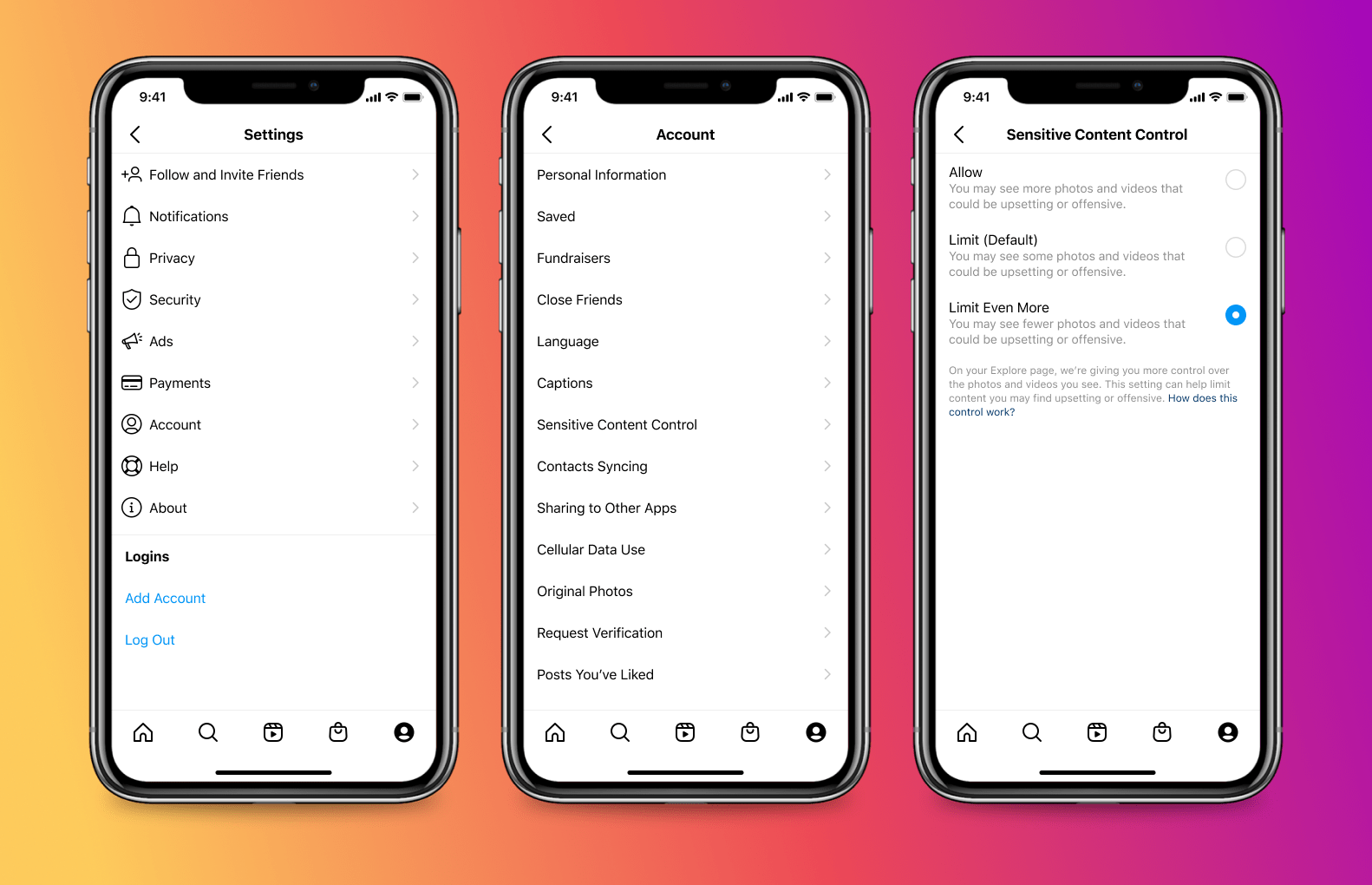

2. Sensitive Content Control

What is it?

This setting allows users to limit and control the type of content they are exposed to on their Instagram ‘Explore’ page. There are three options to choose from:

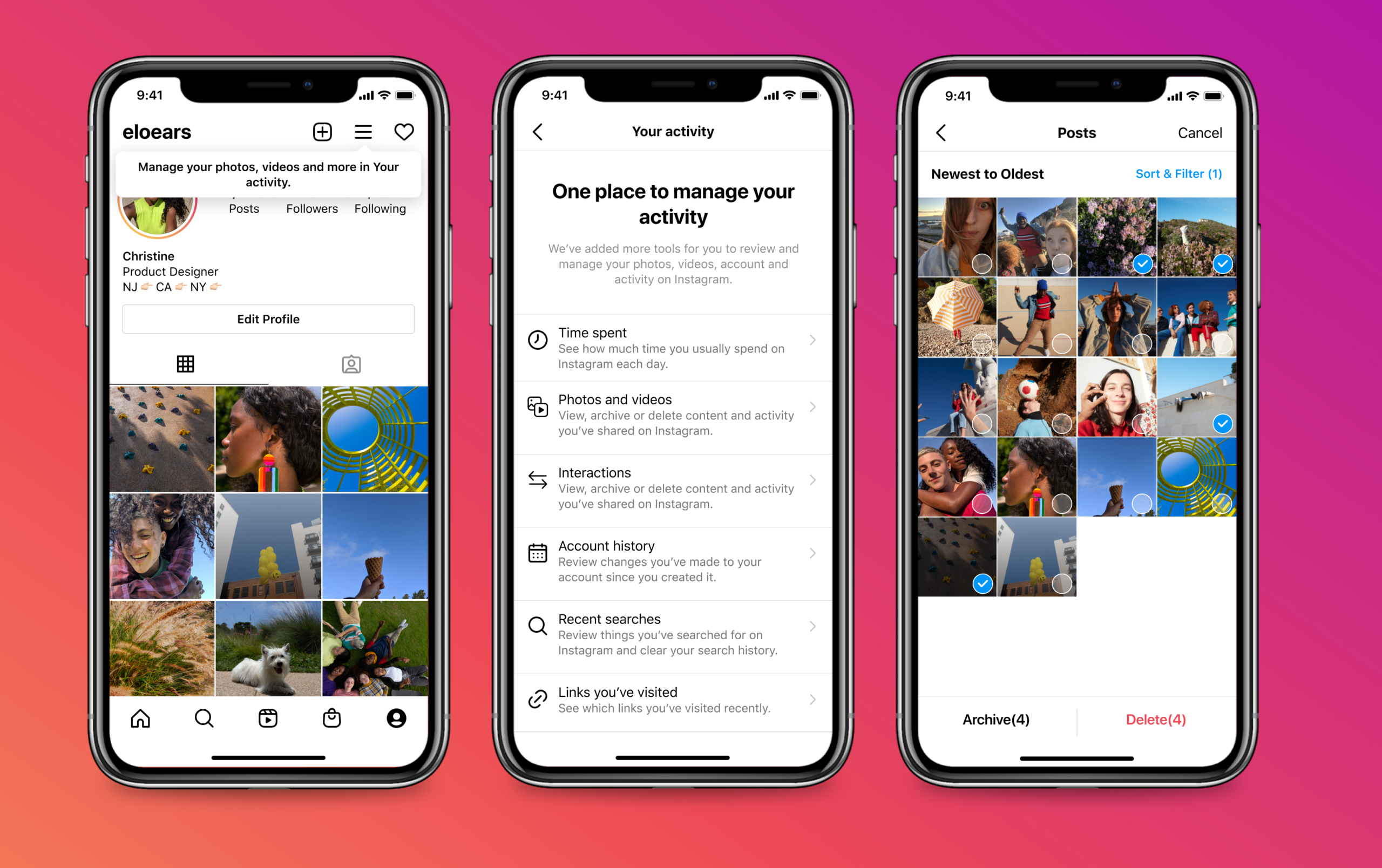

3. Activity Dashboard

What is it?

This feature creates ‘one place to manage all activity’ on the app. The dashboard provides an overview of an individual’s Instagram account activity. It includes time spent on the app, URLs visited, and search history.

Users can also download a copy of all personal information they have shared with Instagram since their account was created. If they would like to delete or archive their posts, they are able to do this in bulk through a checklist on the dashboard.

Potential Risks

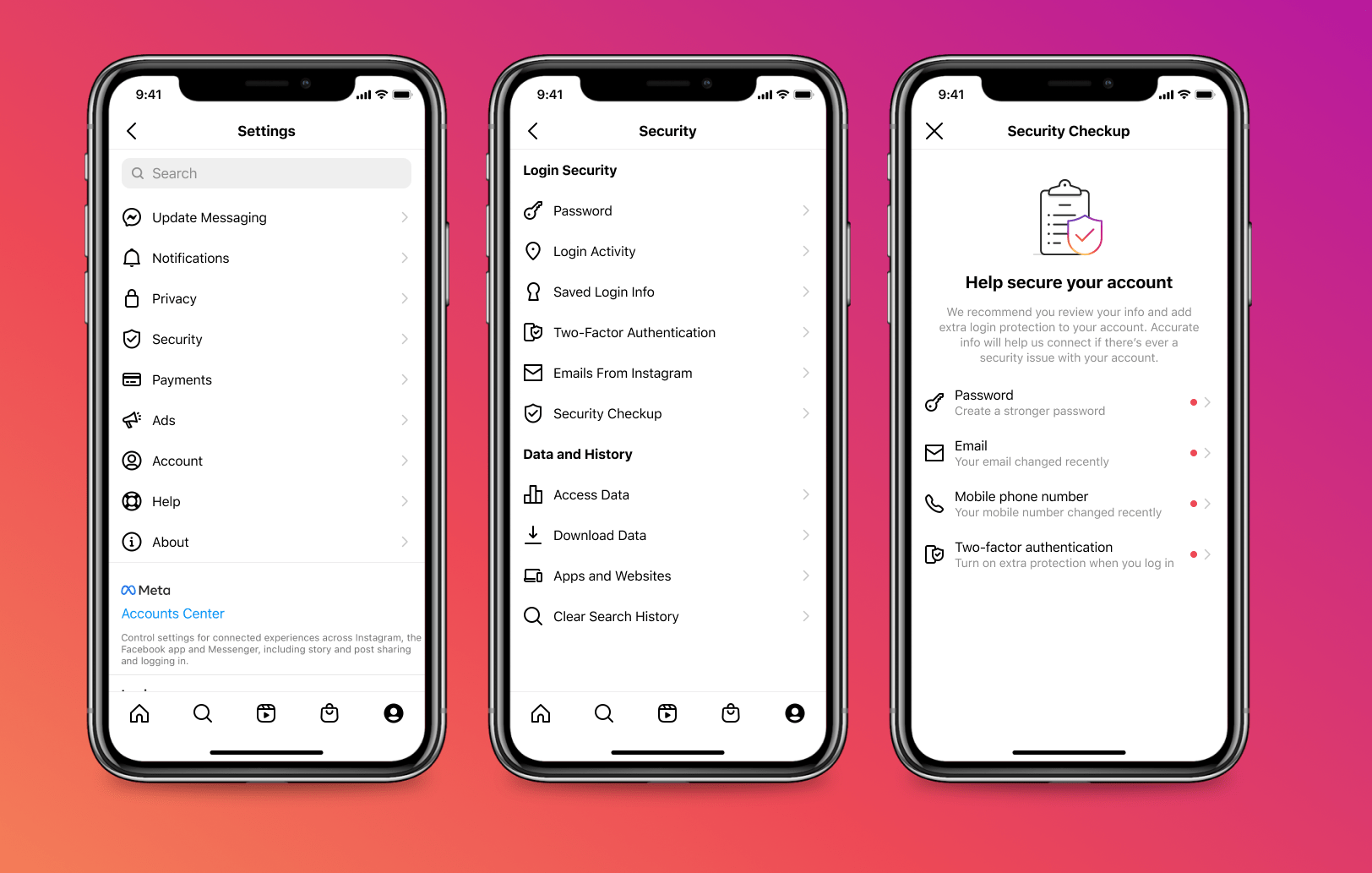

4. Security Check Up

What is it?

The aim of this security check up is to guide individuals through securing their account. It uses step-by-step instructions to:

Areas of Concern

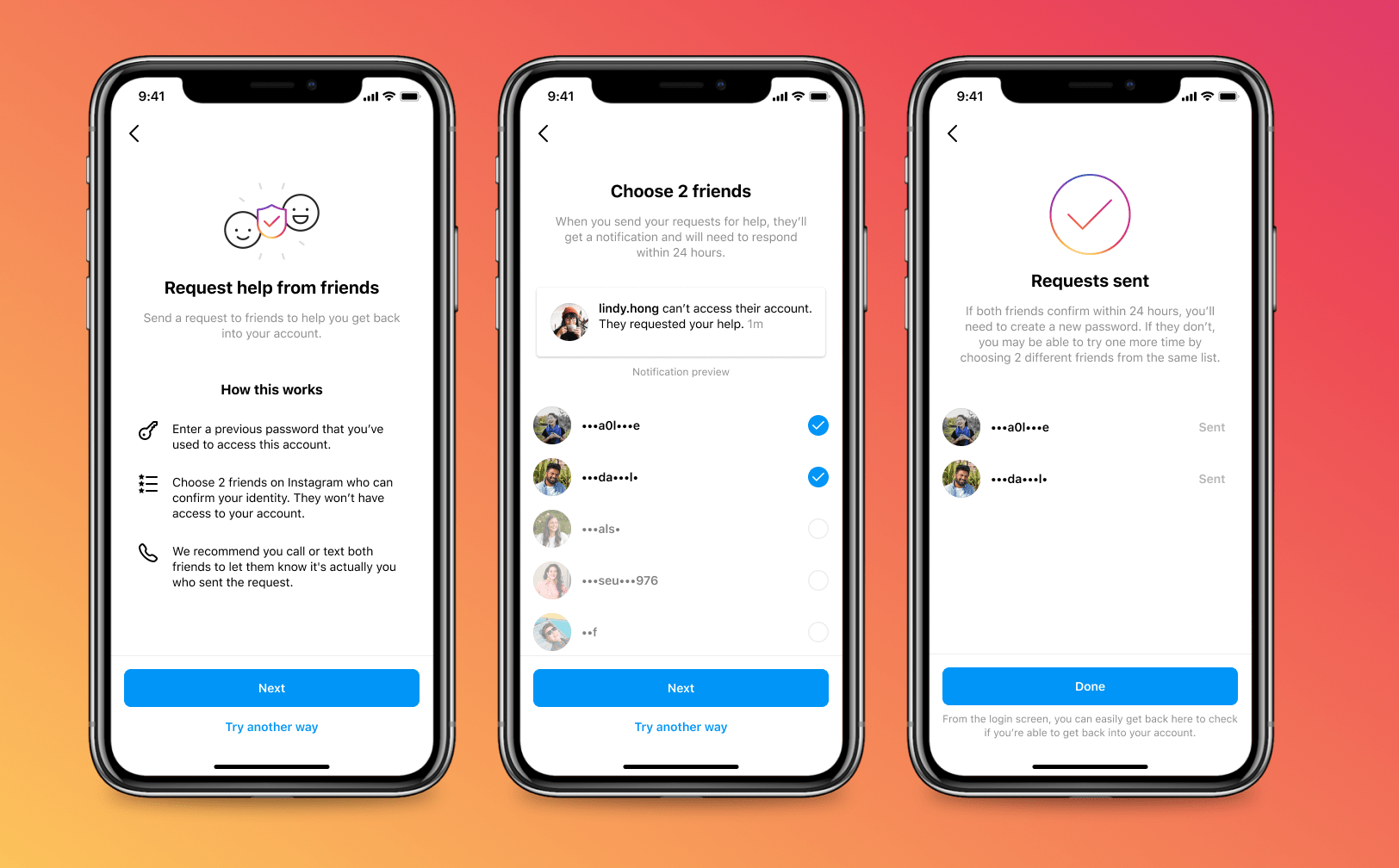

5. Friend’s Help

Feature Status: In development and internal testing

What do we know so far?

This is a new form of account recovery currently in testing. The idea is that, after entering an old password they used on their account, a user can choose two friends to confirm their identity. The friends will not have access to the account. Instead, they will be prompted by an alert on Instagram to confirm the person’s identity within 24 hours. Instagram recommends contacting the chosen friends to tell them it’s a genuine request. If they do not respond, the user will be able to try one more time with two different friends.

As this feature is still in development, our online safety experts were not able to test it.

Areas of Concern

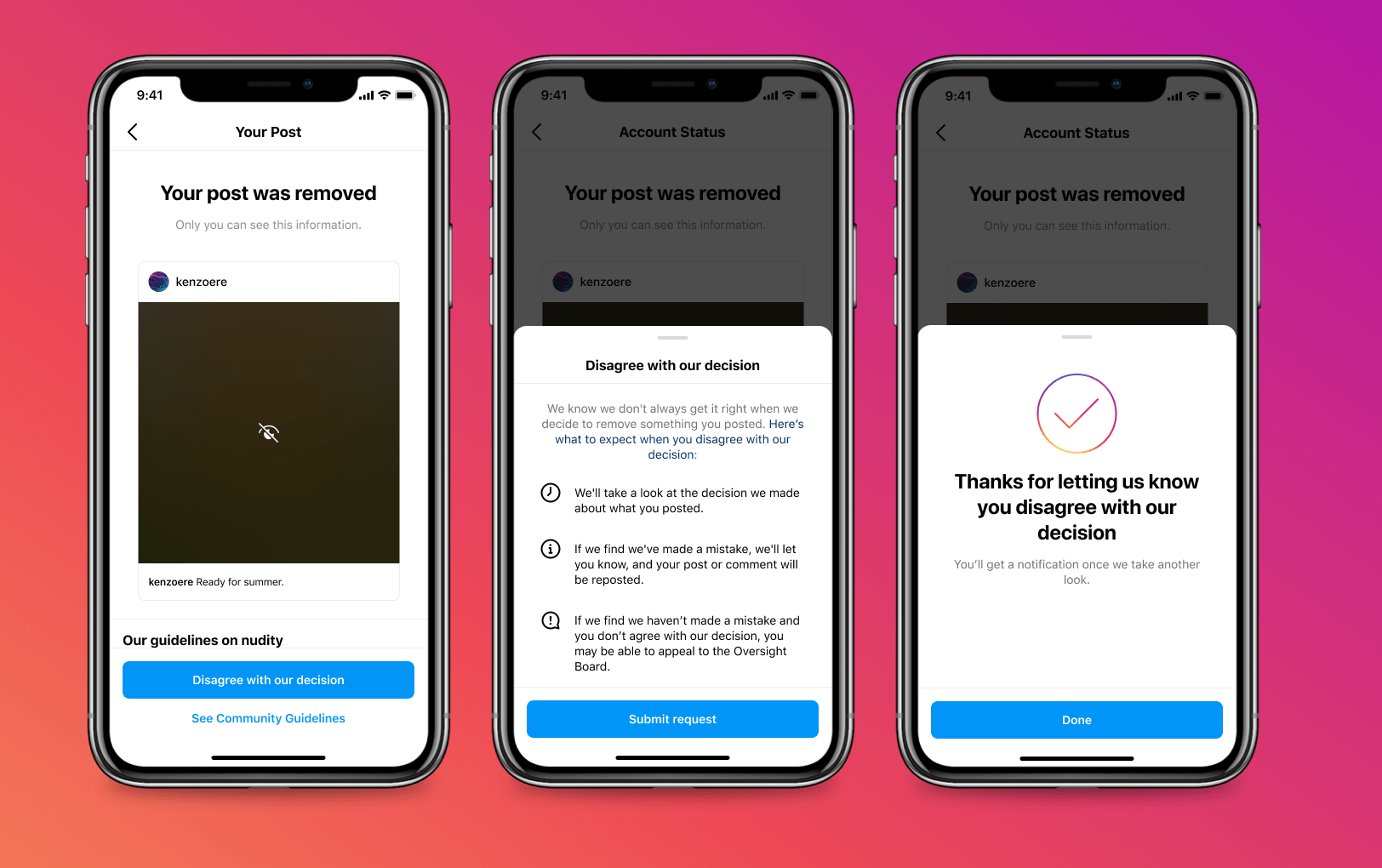

6. Account Status

Feature Status: In testing*

What do we know so far?

This new section will allow individuals to check the status of reports they have made on the app, as well as see any reports made against their own account or restrictions placed on it. They will also be notified here if their account is at risk of being disabled. If a young person feels there has been a mistake, they are able to ‘Request a Review’ to have the action reviewed by Instagram moderators.

Areas of Concern

*As this feature is still in testing, our current information is subject to change.

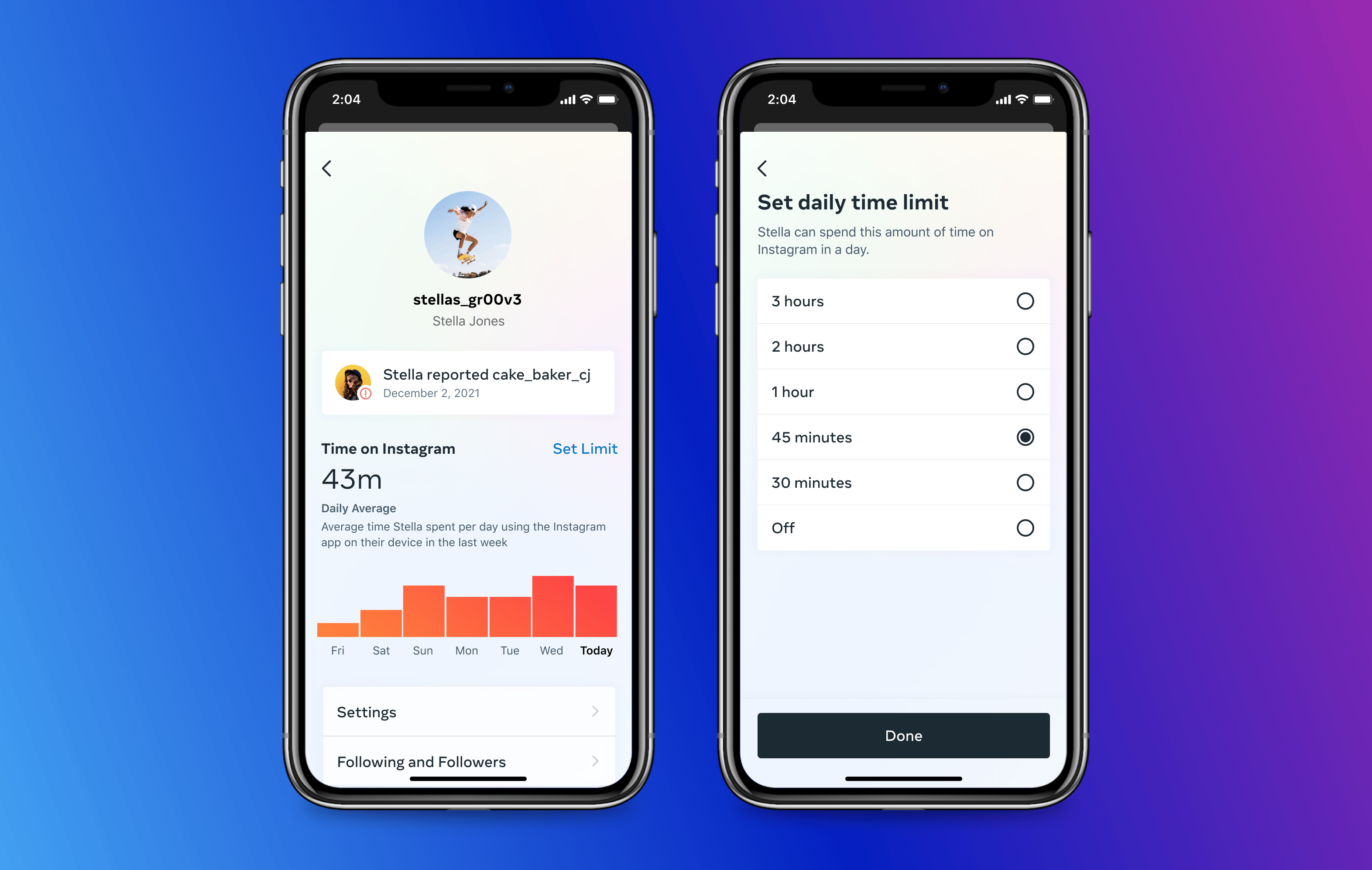

7. Parental Controls

Feature Status: In development*

What do we know so far?

While there is only limited information on Instagram’s plans for this feature, it is clear the platform plans to introduce parental controls. The hope is to eventually allow parents and carers to monitor how much time the young people in their care spend on Instagram and to set time limits. Instagram also plans to release an Educational Hub, which will help parents and carers by giving them tips and tutorials about children’s social media use.

Areas of Concern

*As this feature is still in development, our current information is very likely to change.

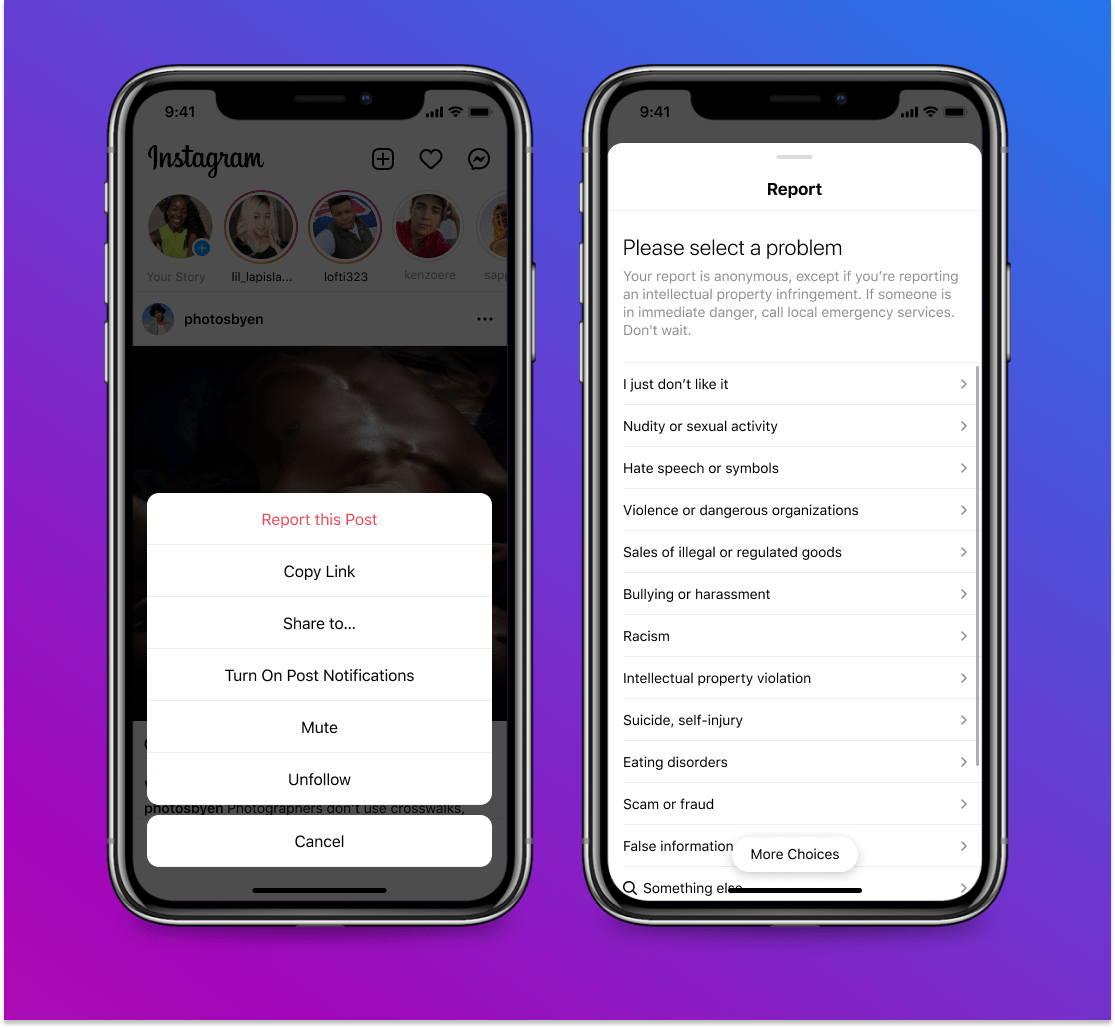

8. Addressing Harmful Content

Feature Status: In development*

What do we know so far?

This new feature may be slightly confusing, as it is technically a wider change to how Instagram works rather than a single setting. The change affects how content shows up on individual accounts and aims to take stronger action against posts containing bullying or hate speech. Essentially, if a user reports a post, they will be less likely to see similar posts at the top of their feed.

Areas of Concern

Potential Risks

*As this feature is still in development, our current information is very likely to change.

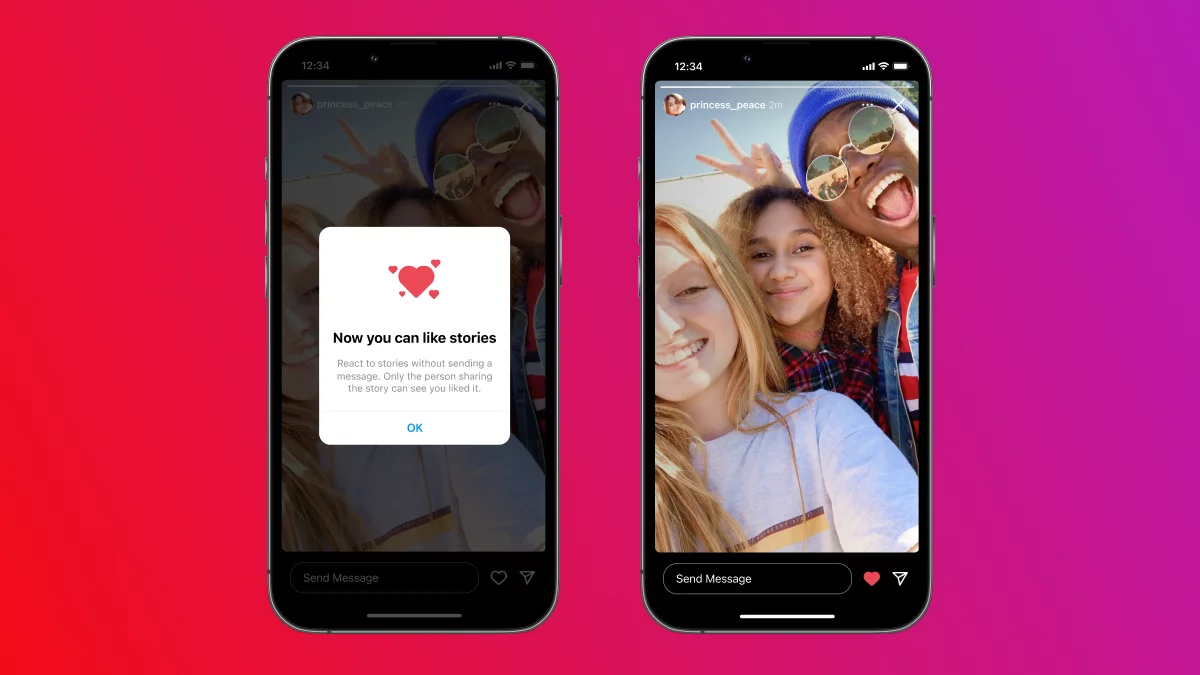

9. Story Likes

Feature Status: Gradual Release*

What do we know so far?

This feature will allow individuals to ‘like’ an Instagram story. Previously, reacting to a story would automatically send a direct message (DM). Now, users will be able to react to a story quickly and without ‘clogging up’ someone’s DMs. These likes will not be public. An individual will have to open the ‘view sheet’ to see who has liked their story and how many likes it has.

Areas of Concern

*As this feature is still in development, our current information is very likely to change.

Source: TechViral
