Last Updated on 13th May 2025

Children are being introduced to the online world younger than ever before, with Ofcom reporting in 2024 that more children aged 5-7 were present online than in previous years. This includes activities such as sending messages, using social media, viewing live streams and gaming online.

With this in mind, it’s crucial to be vigilant when the younger children in your care have access to devices. This involves being aware of what platforms they’re using, what they’re doing on these platforms, and utilising any available safety settings. But what platforms are younger children using the most?

We’ve compiled a guide on a selection of platforms younger children are logging on to, helping you to keep them safer and keep yourself in-the-know.

YouTube / YouTube Kids

A 2024 study found that 21% of children start using YouTube before age 4 and noted that, on average, they use the platform for over an hour a day, nearly 5 days a week. This is a significant portion of a young child’s time, with studies showing that the development of younger children is impacted significantly by the quality of the content they’re viewing.

Behind the colourful thumbnails and intriguing videos, there is an intricate recommendation system: the YouTube algorithm. This determines which videos are suggested to a viewer next. As a result, recommended videos can very quickly change from educational to inappropriate, with some videos on YouTube being reported as containing violent or disturbing content.

This is why YouTube Kids is a safer alternative when giving access to the children in your care. Aiming to provide a family-friendly platform with age-appropriate videos, it offers additional and specialised features such as disabling the search feature, restricting viewing to specific ‘collections’ of videos and a timer that limits screen time.

Roblox

One of the most popular gaming platforms amongst children, Roblox attracts over 80 million users daily, with around 40% below the age of 13. While the vast library of over 40 million games is an obvious attraction for children, it also offers social connection, opportunities for creative expression and learning basic coding concepts.

It has come under fire many times for its inability to make the platform safer for children and young people, exposing them to content that has been reported as violent or sexually explicit, while also leaving them vulnerable to groomers who misuse the platform to exploit children.

In an attempt to make the platform safer, Roblox introduced various safety features. These include:

TikTok

Children in the UK are reportedly using TikTok for more than two hours a day, a figure that has more than doubled since 2020. Despite the platform’s policy that users must be aged 13 or over, 30% of children aged 5 to 7 are using TikTok according to Ofcom.

The main concerns about TikTok revolve around the ‘For You’ feed, as several studies have found that this personalised feed pushes harmful content towards children. This ranges from content about eating disorders to content that normalises or romanticises suicide. What a user views and interacts with feeds the algorithm, which then pushes more of that type of content to them and can trap them in a cycle of viewing harmful content.

TikTok has some rules in place for different ages:

Discord

Discord is a platform for people with similar interests to connect. It is popular amongst the gaming community as it offers players a way to communicate with each other during gameplay.

According to a 2024 Ofcom report, Discord is continually growing more popular with children aged 5-7, despite the platform having an age requirement of 13 years old. This is likely due to the growth of gaming amongst this age group, as they will seek out a platform for their gaming communication needs.

Discord poses some risks to younger children, as many servers on the platform revolve around adult topics and harmful and illegal content is shared amongst servers. Additionally, many people behave differently online than they do offline, which puts children at an increased risk of bullying, whether or not the person is known to them.

If you choose to allow a younger child in your care to use Discord, Family Centre is Discord’s parental control tool and allows you to view:

Other safety settings offered include disabling direct messaging, filtering explicit content, and blocking and reporting features.

What Other Steps Can You Take?

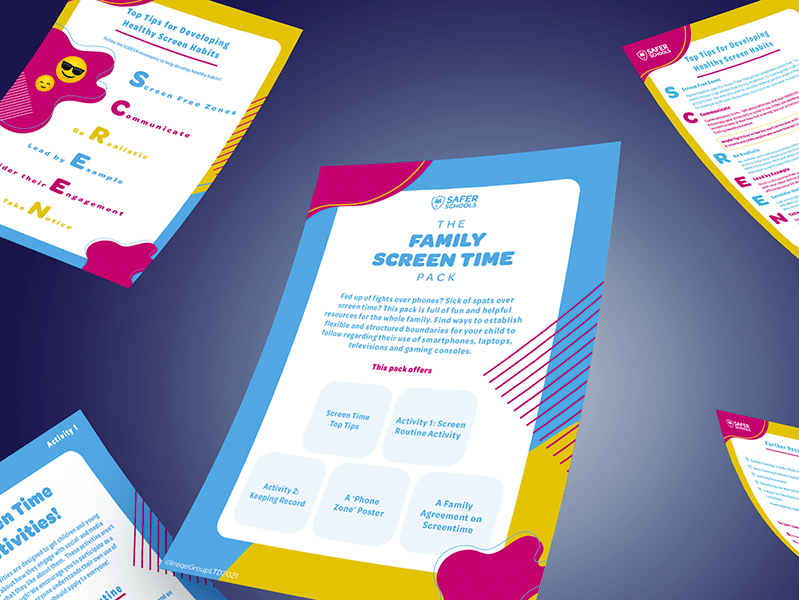

While platform-specific settings offer some amount of safety for the younger children in your care, they are just one element and do not resolve the issue. Take a look at some of our tips for further steps to make the online experience safer for the children in your care.

Utilise Parental Controls

While they can’t ensure that a child won’t encounter harmful content, safety settings will minimise the risk and put measures in place to make a platform more age-appropriate and safer.

Explain to the child or young person in your care why you have chosen the selected restrictions and come to an agreement as this will help them feel involved and in control. If they feel that you have breached their privacy and taken over their device without consent, it may lead them to lie about their online habits.

As the child or young person gets older, you may wish to alter the restrictions, so review them frequently.

You can use Our Safety Centre for help with platform-specific settings.

Have Open Conversations About Online Activity

Take time with the child or young person to talk about their online habits in a positive environment that is free from judgement. Ask open-ended questions like “What games do you like playing?” or “What sort of stuff do you like seeing online?” This will encourage them to be more honest about their habits and come to you if they have questions.

Ensure they know who their trusted adults are if they need to discuss something they have seen online that is harmful or has made them uncomfortable.

Check Their Age

Ask the young person to show you that the date of birth they have submitted to any profiles they have created is correct. If their age is incorrect, safety settings won’t be enabled appropriately.

Most online platforms allow access from the age of 13. When a child or young person in your care turns 13, review their online profiles to ensure their age is correct, as they may have previously lied about their date of birth to gain access to a platform earlier. If their age is incorrect, safety settings won’t be enabled appropriately.

Further Resources

The Friend Ship

An Adventure in Cyber Space

Embark on a cyber space adventure with ‘The Friend Ship’, a children’s book designed to help parents, carers, and safeguarding professionals plant seeds of safety in young minds. Join Jack and his dog, Freddie on a journey through app age restrictions and friend requests as they decide who to let onboard and when to call the Mothership for guidance.

Join our Online Safeguarding Hub Newsletter Network

Members of our network receive weekly updates on the trends, risks and threats to children and young people online.