Last Updated on 24th November 2022

You may have heard about the Online Safety Bill – and if you haven't, it's likely you soon will!

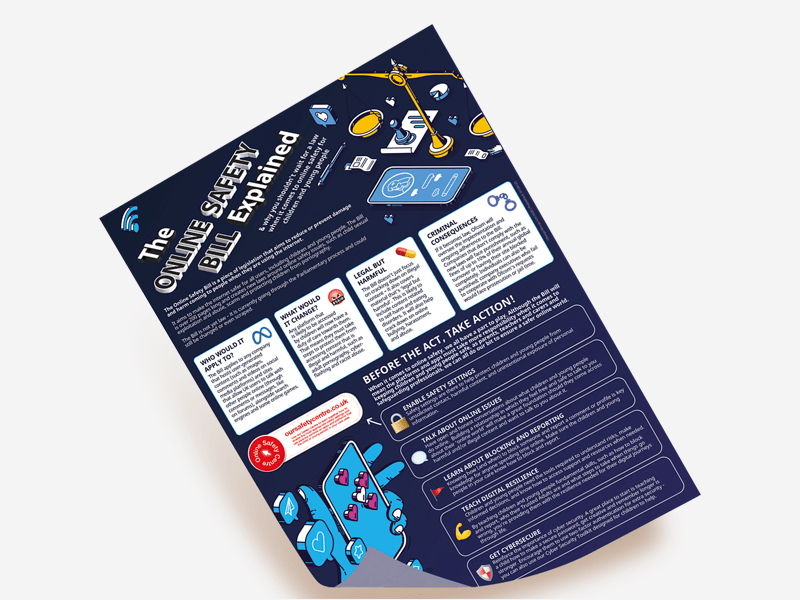

The Online Safety Bill marks one of the biggest planned changes to date in the way online tech companies are held responsible for content that appears on their platforms. It's specifically aimed at companies that host 'user-generated content' (meaning those which allow users to post their own content online) and search engines. That's companies like Facebook, YouTube and Google.

With 225 pages full of clauses and legal speak, the Online Safety Bill is not an easy (or fun!) read for most. That’s why we’ve created this guide to the Online Safety Bill, alongside some tips on how we can all work together to make children and young people safer online.

What Is the Online Safety Bill?

The Online Safety Bill is a piece of legislation that aims to reduce or prevent harm to people when they use the internet.

It aims to make the internet safer for all users, including children, and creates new laws around issues such as child sexual exploitation and abuse, terrorism, scams and protecting children from pornography.

The Bill applies to any company that hosts user-generated content (such as images, comments and videos) and sites that allow UK users to talk with other people online (through comments or messages, like on forums). This means it will apply to sites such as:

The Bill was originally called the Online Harms Bill and grew out of the Online Harms White Paper, a UK government document outlining plans to become a world leader in keeping users safe online.

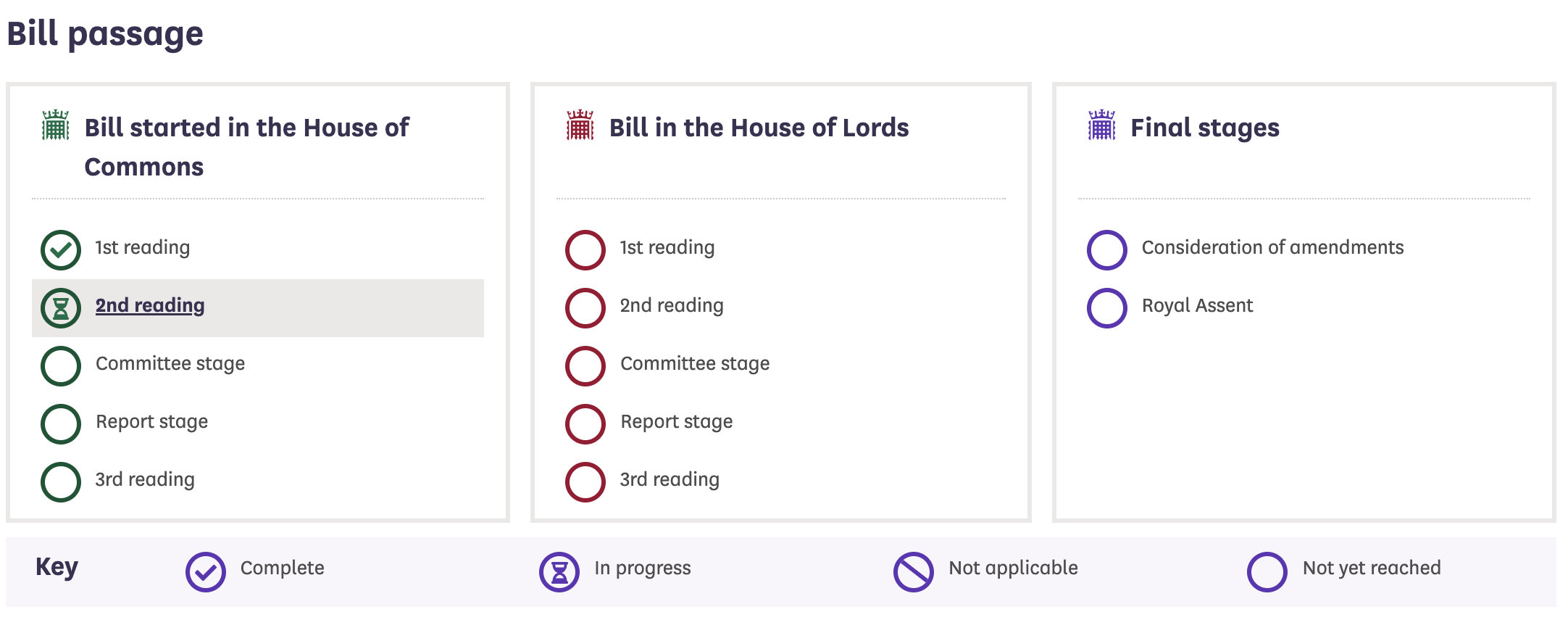

Since then, the Bill has gone through many changes and iterations. As it continues through the parliamentary process of becoming law, its scope has been extended several times. For example, in March 2022, two measures were added to the Bill: the criminalisation of cyberflashing and robust age verification requirements for sites that host or publish pornography.

If it becomes law, Ofcom will oversee the implementation of, and ongoing adherence to, the Bill. Companies that don't comply with the new laws could face penalties, such as fines of up to 10% of their annual global turnover or having their site blocked completely.

Individuals can also be punished: company executives who fail to cooperate with Ofcom's requests could face prosecution or jail time.

What’s In the Online Safety Bill?

The Online Safety Bill covers two main areas related to two different groups of people: content that is harmful to adults and provisions to protect children. For this article, we're focusing on the latter.

Any platform that is likely to be accessed by children will have a duty of care towards children and young people. That means it must take steps to protect them from accessing content that is illegal or harmful.

However, the Bill doesn't just focus on cracking down on illegal content – it also covers content that's 'legal but harmful'. This is likely to include:

Some have been critical of this, as what's defined as harmful can be subjective. Content that many view as harmful may be helpful to certain individuals – for example, some young people may use self-harm-related content to help them avoid self-harming.

Platforms will have to assess the threats to the safety of children. If risks are identified, they are legally required to act.

The Bill will also mean that if harmful content is seen, it must be easy to report. Platforms will also have a duty to report child sexual exploitation and abuse content to the National Crime Agency.

Other areas covered by the Bill include:

This list is not exhaustive and, as the Bill has not been passed yet, there is still scope for more offences and obligations to be added before it becomes law. However, this guide highlights some of the most important and relevant aspects of the Bill when it comes to safeguarding children online.

What’s Been the Response to the Online Safety Bill?

The Online Safety Bill has faced criticism from both directions, with some saying it goes too far and others believing it doesn't go far enough. Groups such as Big Brother Watch and the Open Rights Group have warned that the Bill could remove protections for private citizens and even put children at risk because of issues around the use of end-to-end encryption.

Other organisations, such as Samaritans, think the Bill doesn't do enough to protect people from suicide- and self-harm-related content, and that this type of content will simply move to less prominent sites. The NSPCC has said the Bill falls short of what is needed to protect young people and has doubts over areas such as the effectiveness of the child abuse response, weaknesses in the proposed child safety duties and the strength of enforcement measures.

Does the Online Safety Bill Change What I Need to Do?

Unless you're running a tech company, most likely not. The whole point of the Online Safety Bill is that the onus is on the tech companies themselves to 'step up to the safeguarding plate' and make changes – or face the consequences.

For safeguarding professionals, legal duties will remain the same – the Bill doesn't remove responsibilities or mean safeguarding children online is put into the hands of the tech companies instead. Just as a whole-school approach should be taken when it comes to safeguarding, the Bill would in theory become part of the ecosystem; everyone must engage with the process and carry out their role in keeping children and young people safer online.

Everyone has a part to play in keeping children and young people safer online. Make sure you’re subscribed to receive our Safeguarding Alerts to help safeguard children in today’s digital world.
